Introduction to Kubernetes 2025

What is Kubernetes and what is Kubernetes used for?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides a framework for running, organizing, and managing applications across a cluster of machines. With Kubernetes, developers describe the desired state of their applications, and the platform takes care of much of the underlying infrastructure work, such as placing containers on machines and replacing failed ones. It offers features like automatic scaling, self-healing, and load balancing, making it easier to deploy and manage applications at scale.

Purpose and Benefits of Kubernetes in Modern Computing

Kubernetes plays a vital role in modern computing by simplifying the management of complex application architectures. It enables organizations to deploy applications consistently across multiple environments, including on-premises data centers, public clouds, and hybrid setups. Some key benefits of Kubernetes include:

- Scalability: Kubernetes can scale applications up or down based on demand, either manually or automatically (for example, with a HorizontalPodAutoscaler), which helps keep resource utilization efficient. It distributes the workload across pods and nodes.

- High Availability: By distributing containers across multiple nodes, Kubernetes keeps applications available even if some nodes fail. It detects failures, restarts containers that crash, and replaces pods lost on a failed node with new pods on healthy nodes.

- Portability: Kubernetes provides a platform-agnostic framework that allows applications to be deployed and managed consistently across environments. It abstracts away many underlying infrastructure details, so the same container images and configuration can run on different clusters with few or no changes.

- Flexibility: Kubernetes supports multiple container runtimes through its Container Runtime Interface (CRI) and can coordinate the many containers that together make up an application, which facilitates microservices architectures and better modularization of applications.
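Automatic scaling is typically configured with a HorizontalPodAutoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that rule in Python, including the default 10% tolerance band within which no scaling happens. The function name and the min/max replica bounds are hypothetical; the real controller also handles multiple metrics, missing or unready pods, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Toy version of the HorizontalPodAutoscaler scaling rule.

    min_replicas and max_replicas are hypothetical bounds for this example;
    in a real HPA they come from spec.minReplicas and spec.maxReplicas.
    """
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 pods averaging 90% CPU against a 50% target: ceil(3 * 1.8) = 6 pods.
print(desired_replicas(3, current_metric=90, target_metric=50))
```

Rounding up errs on the side of capacity: a fractional result such as 5.4 pods becomes 6 rather than 5.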

Historical Background and Evolution of Kubernetes

- Kubernetes was originally developed by Google, starting as an internal project inspired by Borg, Google’s internal container orchestration system. Google later donated it to the Cloud Native Computing Foundation (CNCF), a community-driven organization focused on advancing cloud-native technologies, when the CNCF was founded in 2015.

- It was first released to the public in 2014 and has since evolved into one of the most widely adopted container orchestration platforms. The Kubernetes community continues to innovate and enhance the platform, contributing to its rapid growth and establishment as the de facto standard for managing containerized workloads.

Docker vs Kubernetes

- While Kubernetes and Docker are often mentioned together, they serve different purposes. Docker is a popular platform for building and packaging containerized applications, whereas Kubernetes focuses on orchestrating and managing those containers at scale.

- Docker provides the tools and infrastructure to create lightweight, isolated containers that encapsulate an application and its dependencies. It enables developers to package an application once and run it consistently across different environments.

- Kubernetes, on the other hand, provides the infrastructure for deploying and managing those containers on a cluster of machines. It ensures that containers run reliably, scales applications as needed, and handles network routing, load balancing, and other operational tasks. Kubernetes can run containers from images built with Docker, because those images follow the standard OCI image format, and it works with any container runtime that implements its Container Runtime Interface (CRI).

Key Concepts and Terminology in Kubernetes

Nodes and Kubernetes Cluster

In Kubernetes, a node is an individual machine (physical or virtual) that runs workloads. Each node runs pods, and therefore containers, and together the nodes form a Kubernetes cluster. A cluster typically consists of multiple nodes for scalability and fault tolerance. An agent on each node communicates with the control plane (historically called the master node), which decides where pods are placed and coordinates their scheduling and management.

Containers and Kubernetes Pod

Containers are lightweight, isolated environments that contain all the software dependencies needed to run a specific application. In Kubernetes, a pod is the smallest deployable unit, representing one or more closely related containers that share a network namespace and can share storage volumes. Kubernetes scales an application by running more or fewer replicas of its pods, and it schedules those pods onto nodes based on resource availability and other constraints.

Kubernetes Service and Kubernetes Deployment

A Kubernetes service is an abstraction that defines a logical set of pods and a policy for accessing them. It provides a stable network endpoint for reaching those pods, even as individual pods are created, replaced, and assigned new IP addresses. Services enable load balancing and service discovery within a cluster, which is essential for building resilient and highly available applications.
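A service usually finds its pods through a label selector: every ready pod whose labels contain all of the selector's key/value pairs becomes a backend endpoint. The sketch below models that equality-based matching in plain Python. The function names, pod dictionaries, labels, and IP addresses are hypothetical example data, not Kubernetes API objects.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based match: every selector key must be present with the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_endpoints(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """Return the IPs of ready pods selected by a service's selector."""
    if not selector:
        # A service without a selector gets no automatic endpoints.
        return []
    return [
        pod["ip"]
        for pod in pods
        if pod["ready"] and matches(selector, pod["labels"])
    ]


# Hypothetical pods: only ready pods labelled app=web are selected.
pods = [
    {"ip": "10.0.0.1", "ready": True, "labels": {"app": "web", "tier": "frontend"}},
    {"ip": "10.0.0.2", "ready": False, "labels": {"app": "web"}},
    {"ip": "10.0.0.3", "ready": True, "labels": {"app": "web"}},
    {"ip": "10.0.0.4", "ready": True, "labels": {"app": "db"}},
]
print(service_endpoints({"app": "web"}, pods))
```

As pods come and go, the endpoint list changes, while clients keep using the same service address.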

A Kubernetes deployment is a higher-level object that manages the deployment and scaling of a set of pods. It provides a declarative way to define the desired state: you specify, for example, the container image and the number of replicas, and Kubernetes creates and updates pods to match. Under the hood, a deployment manages replica sets, which in turn create the pods. Deployments help ensure application availability, handle rolling updates, and allow easy rollbacks if necessary.
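During a rolling update, a deployment's maxSurge and maxUnavailable settings (both 25% by default) bound how far the pod count may rise above, and availability may fall below, the desired number of replicas. Percentages are converted to pod counts with maxSurge rounded up and maxUnavailable rounded down. The helper below is a hypothetical sketch of that arithmetic, not Kubernetes source code; it assumes, as the deployment controller does, that one pod may be unavailable when both values resolve to zero, so the rollout can still make progress.

```python
from __future__ import annotations


def to_pod_count(value: int | str, replicas: int, round_up: bool) -> int:
    """Resolve an absolute count or a percentage string such as "25%"."""
    if isinstance(value, str) and value.endswith("%"):
        percent = int(value[:-1])
        # Integer arithmetic avoids floating-point rounding surprises.
        if round_up:
            return (replicas * percent + 99) // 100
        return replicas * percent // 100
    return int(value)


def rollout_bounds(replicas: int, max_surge: int | str = "25%",
                   max_unavailable: int | str = "25%") -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = to_pod_count(max_surge, replicas, round_up=True)
    unavailable = to_pod_count(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Rounding can make both zero; allow one unavailable pod so the rollout can progress.
        unavailable = 1
    return replicas + surge, replicas - unavailable


# 10 replicas with the defaults: surge = ceil(2.5) = 3, unavailable = floor(2.5) = 2.
print(rollout_bounds(10))
```

With 10 replicas and the defaults this yields (13, 8): at most 13 pods exist at once, and at least 8 remain available throughout the update.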

Replication Controllers and Replica Sets

Replication controllers and replica sets are both responsible for maintaining a specified number of pod replicas, ensuring that the desired number of pods is always running in the cluster. The replication controller is the older, legacy object, and the replica set is its successor: replica sets support set-based label selectors (using operators such as In, NotIn, and Exists), whereas replication controllers only support equality-based selectors. Replica sets are not deprecated, but they are rarely created directly, because a deployment creates and manages replica sets for you and adds features such as rolling updates.
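The selector difference is easiest to see in code. A replica set's selector can combine matchLabels (equality-based) with matchExpressions that use the set-based operators In, NotIn, Exists, and DoesNotExist, and all conditions must hold. The sketch below evaluates such a selector against a pod's labels in plain Python; the dictionary layout mirrors the YAML field names, but the function names and the example selector are hypothetical.

```python
def matches_expression(expr: dict, labels: dict[str, str]) -> bool:
    """Evaluate one set-based requirement against a pod's labels."""
    key, operator = expr["key"], expr["operator"]
    values = set(expr.get("values", []))
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        # Pods that lack the key also satisfy NotIn.
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unknown operator: {operator}")


def matches_selector(selector: dict, labels: dict[str, str]) -> bool:
    """All matchLabels pairs and all matchExpressions must match (logical AND)."""
    label_ok = all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())
    return label_ok and all(
        matches_expression(expr, labels) for expr in selector.get("matchExpressions", [])
    )


# Hypothetical selector: web pods in the frontend or edge tier, excluding canaries.
selector = {
    "matchLabels": {"app": "web"},
    "matchExpressions": [
        {"key": "tier", "operator": "In", "values": ["frontend", "edge"]},
        {"key": "canary", "operator": "DoesNotExist"},
    ],
}
print(matches_selector(selector, {"app": "web", "tier": "edge"}))
```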

Understanding Kubernetes Architecture

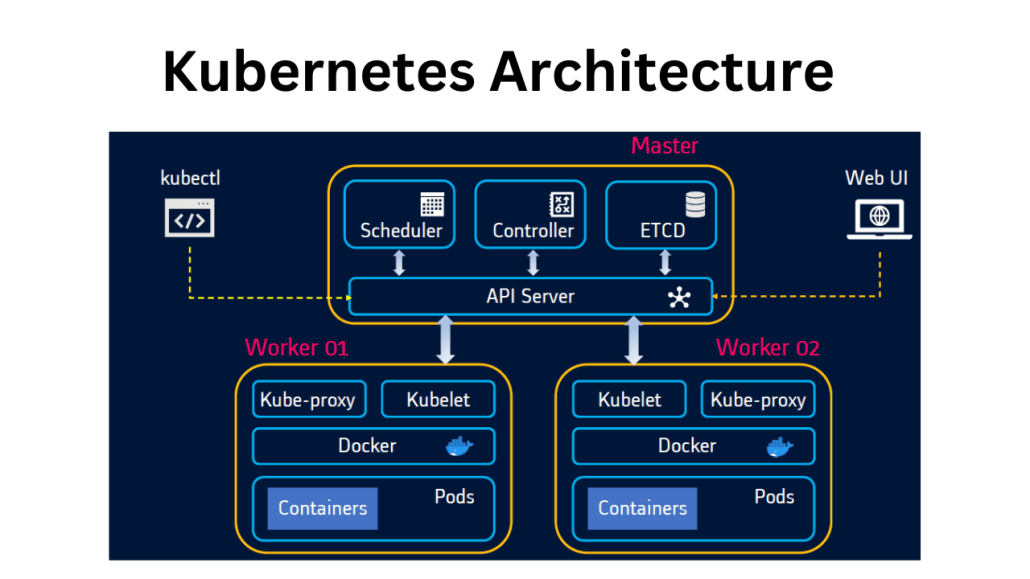

Control Plane

The control plane is the brain behind Kubernetes, responsible for managing and controlling the cluster. It consists of several components, each with a specific role:

kube-apiserver

The kube-apiserver serves as the front end of the Kubernetes control plane. It exposes the Kubernetes API, which clients use to interact with the cluster. All operations, such as creating, updating, and deleting resources, are processed by the kube-apiserver. It authenticates, authorizes, and validates incoming API requests, then persists the resulting changes to the cluster’s state in etcd; it is the only control plane component that talks to etcd directly.

etcd

etcd is a distributed key-value store that serves as the source of truth for the cluster’s data. It stores crucial information like cluster state, configurations, and metadata. etcd uses the Raft consensus algorithm to keep data consistent across its members, and running it as a cluster of several members (commonly three or five) provides high availability, making it a critical component of the control plane.
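The API server stores each object in etcd under a key such as /registry/pods/default/web-1 (for a pod named web-1 in the default namespace; /registry is the default prefix) and uses etcd's revision numbers, exposed as an object's resourceVersion, for optimistic concurrency: an update based on a stale version is rejected with a conflict. The toy in-memory store below illustrates only that compare-and-swap idea; it is not etcd's API, and the class and method names are hypothetical.

```python
from __future__ import annotations


class Conflict(Exception):
    """Raised when a write is based on an outdated revision."""


class ToyStore:
    """In-memory key-value store with etcd-style revisions (illustration only)."""

    def __init__(self) -> None:
        self._revision = 0  # store-wide counter, bumped on every write
        self._data: dict[str, tuple[dict, int]] = {}  # key -> (value, mod revision)

    def get(self, key: str) -> tuple[dict, int] | None:
        return self._data.get(key)

    def put(self, key: str, value: dict, expected_revision: int | None = None) -> int:
        """Write value; if expected_revision is given, succeed only when it equals
        the key's current mod revision (0 means the key must not exist yet)."""
        current = self._data.get(key)
        current_revision = current[1] if current else 0
        if expected_revision is not None and expected_revision != current_revision:
            raise Conflict(f"{key}: expected revision {expected_revision}, found {current_revision}")
        self._revision += 1
        self._data[key] = (value, self._revision)
        return self._revision


store = ToyStore()
key = "/registry/pods/default/web-1"
first = store.put(key, {"phase": "Pending"}, expected_revision=0)       # create
second = store.put(key, {"phase": "Running"}, expected_revision=first)  # update
try:
    # A second writer still holding revision `first` loses the race.
    store.put(key, {"phase": "Failed"}, expected_revision=first)
    stale_write_rejected = False
except Conflict:
    stale_write_rejected = True
print(first, second, stale_write_rejected)
```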

kube-scheduler

The kube-scheduler is responsible for assigning newly created pods to worker nodes. For each pod, it first filters out nodes that cannot run it (for example, because they lack the requested CPU or memory or would violate a scheduling constraint), then scores the remaining nodes and binds the pod to the highest-scoring one. It takes into account factors like resource requests, node capacity, pod affinity/anti-affinity, and topology spread (for example, across zones), which helps spread workloads and use cluster resources efficiently.
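The filter-then-score process can be sketched in a few lines. The version below keeps only two ideas: filtering out nodes whose remaining allocatable CPU or memory cannot fit the pod's resource requests, and scoring the rest with a least-allocated rule that prefers nodes with more free capacity (similar in spirit to the default LeastAllocated strategy of the NodeResourcesFit plugin). Node names and sizes are hypothetical, and the real scheduler runs many more plugins, such as affinity and topology spread.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu_allocatable: int  # millicores
    mem_allocatable: int  # MiB
    cpu_requested: int = 0  # sum of requests of pods already on the node
    mem_requested: int = 0


def fits(node: Node, cpu: int, mem: int) -> bool:
    """Filter step: the pod's requests must fit in the node's remaining capacity."""
    return (node.cpu_requested + cpu <= node.cpu_allocatable
            and node.mem_requested + mem <= node.mem_allocatable)


def least_allocated_score(node: Node, cpu: int, mem: int) -> float:
    """Score step (0-100): average fraction of CPU and memory left free after placement."""
    cpu_free = (node.cpu_allocatable - node.cpu_requested - cpu) / node.cpu_allocatable
    mem_free = (node.mem_allocatable - node.mem_requested - mem) / node.mem_allocatable
    return 100 * (cpu_free + mem_free) / 2


def schedule(nodes: list[Node], cpu: int, mem: int) -> str | None:
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None  # the pod stays Pending
    # Ties go to the first node here; the real scheduler breaks ties randomly.
    return max(feasible, key=lambda n: least_allocated_score(n, cpu, mem)).name


nodes = [
    Node("node-a", 4000, 8192, cpu_requested=3500, mem_requested=1024),
    Node("node-b", 4000, 8192, cpu_requested=1000, mem_requested=2048),
    Node("node-c", 2000, 4096),
]
print(schedule(nodes, cpu=500, mem=1024))
```

For a pod requesting 500 millicores and 1024 MiB, all three nodes pass the filter and node-c scores highest (75, versus 62.5 for node-b and 37.5 for node-a).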

kube-controller-manager

The kube-controller-manager runs various controllers that are responsible for maintaining the desired state of the cluster, including controllers for nodes, jobs, endpoints, service accounts, and more. The controllers constantly monitor the cluster and take corrective actions to keep the system in the desired state. For example, the node controller notices when a node stops responding and marks it as unhealthy; pods on that node are then evicted, and controllers such as the replica set controller create replacement pods, which the scheduler places on healthy nodes.
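Controllers share a reconciliation pattern: observe the current state, compare it with the desired state, and act to close the gap, over and over. The sketch below applies that loop to a replica count in plain Python. It is a simplified illustration with hypothetical names; real controllers watch the kube-apiserver for changes instead of receiving a set of pod names, and they choose which pods to delete more carefully.

```python
from __future__ import annotations

import itertools

_pod_ids = itertools.count()  # source of unique names for new pods (hypothetical naming)


def reconcile(desired: int, running: set[str]) -> list[tuple[str, str]]:
    """One reconciliation pass: return the actions that move `running` toward `desired` pods."""
    diff = desired - len(running)
    if diff > 0:
        return [("create", f"web-{next(_pod_ids)}") for _ in range(diff)]
    if diff < 0:
        # Remove the surplus; sorting just makes the choice deterministic here.
        return [("delete", name) for name in sorted(running)[:-diff]]
    return []  # already converged


def apply_actions(actions: list[tuple[str, str]], running: set[str]) -> None:
    for verb, name in actions:
        if verb == "create":
            running.add(name)
        else:
            running.discard(name)


running = {"web-a", "web-b", "web-c"}
running.discard("web-b")  # simulate a pod lost when its node failed
apply_actions(reconcile(3, running), running)
print(len(running), reconcile(3, running))
```

Because each pass only compares desired and observed state, the same loop handles failures, manual deletions, and scale changes alike.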

Worker Nodes

The worker nodes are the machines where containers are actually deployed and run. They form the data plane of the Kubernetes architecture and are responsible for executing the workload. Each worker node consists of the following components:

kubelet

The kubelet is the primary agent running on each worker node. It watches the kube-apiserver for pods assigned to its node, manages their lifecycle, and reports node and pod status back to the control plane. The kubelet ensures that the containers described in each pod specification are running and healthy, restarting them when they fail. It also takes care of node-specific tasks like mounting storage volumes into pods and, through the container runtime, pulling container images.
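When a container keeps exiting, the kubelet restarts it according to the pod's restartPolicy, but with an exponential back-off delay. The Kubernetes documentation describes the default as starting at 10 seconds, doubling after each restart, and capping at five minutes, with the timer reset once a container runs for 10 minutes without problems. The hypothetical helper below computes that schedule; it is a sketch of the documented behavior, not kubelet code, and newer releases may allow these values to be tuned.

```python
def restart_delays(restarts: int, initial: int = 10, cap: int = 300) -> list[int]:
    """Back-off delay in seconds before each of the next `restarts` container restarts."""
    delays = []
    delay = initial
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap)  # double the wait, but never exceed the cap
    return delays


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

This growing delay is what appears as the CrashLoopBackOff status for a pod whose container keeps failing.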

kube-proxy

The kube-proxy is a network proxy that runs on each node. It implements services by maintaining network rules on the node (for example, iptables or IPVS rules) that route traffic sent to a service’s virtual IP address to one of the service’s backend pods, spreading the load across those pods. This lets pods and other clients reach a service through a stable address, regardless of which pods currently back it or where they run.
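In its iptables mode (IPVS and other modes also exist), kube-proxy picks a backend for each new connection at random. It does this with a chain of rules, one per endpoint, evaluated in order: the first rule matches with probability 1/n, the second with 1/(n-1), and so on, with the last rule always matching. The sketch below uses exact fractions to check that this chain gives every endpoint the same 1/n share of new connections; the function names are hypothetical, and it models only the arithmetic, not real iptables rules.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list[Fraction]:
    """Match probability of each rule in an n-endpoint chain; the last is always 1."""
    return [Fraction(1, n - i) for i in range(n)]


def endpoint_shares(n: int) -> list[Fraction]:
    """Overall probability that each endpoint receives a new connection."""
    shares = []
    not_yet_matched = Fraction(1)  # probability that no earlier rule matched
    for p in rule_probabilities(n):
        shares.append(not_yet_matched * p)
        not_yet_matched *= 1 - p
    return shares


print([str(p) for p in rule_probabilities(3)])  # ['1/3', '1/2', '1']
print([str(s) for s in endpoint_shares(3)])     # ['1/3', '1/3', '1/3']
```

Increasing the per-rule probability along the chain compensates for the traffic already claimed by earlier rules, which is why the overall split is uniform.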

Container Runtime

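The container runtime is responsible for running containers on each worker node. Kubernetes supports any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O. Built-in support for Docker Engine (the dockershim component) was removed in Kubernetes 1.24; Docker Engine can still be used through the cri-dockerd adapter, and images built with Docker run on any CRI-compliant runtime. The container runtime pulls container images, creates and manages containers, and provides isolation and resource management.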

Future Trends and New Features in Kubernetes

The Kubernetes ecosystem continues to evolve quickly. Here are some key developments and features:

A. Key Trends and Advancements in the Kubernetes Ecosystem

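- Cluster Federation: Many organizations now run multiple clusters, and the ecosystem is working toward managing them centrally. Federation and other multi-cluster tools aim to coordinate distributed clusters for better scalability and load balancing across them. The original Kubernetes Cluster Federation project (KubeFed) has since been archived, and this work continues in projects such as Karmada and in the Kubernetes SIG Multicluster APIs.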

- Serverless Containers: Projects such as Knative bring serverless patterns to Kubernetes, including request-driven autoscaling and scaling to zero when there is no traffic. This can improve efficiency and reduce costs for workloads with variable demand.

- Machine Learning Integration: Kubernetes is increasingly used for machine learning workloads. Projects such as Kubeflow help data scientists and engineers deploy and manage ML workflows built with popular frameworks like TensorFlow and PyTorch, while device plugins let pods request GPUs and other accelerators.

- Enhanced Security: Security is a paramount concern in containerized environments. Kubernetes and its ecosystem address it with features such as sandboxed container runtimes (selected through RuntimeClass), network policies, and Pod Security Admission. These help harden a cluster, but they still need to be configured correctly.

B. Exploring Kubernetes as a Service (KaaS)

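Kubernetes as a Service (KaaS), often called managed Kubernetes, is a widely used model in which a provider runs and maintains the cluster’s control plane for you; examples include Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS). It lets users spend more time on their applications and less on the underlying infrastructure. Some key features of KaaS include: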

- Easy Cluster Provisioning: With KaaS, users can effortlessly provision Kubernetes clusters with just a few clicks. This eliminates the need for manual cluster setup and configuration, saving time and effort.

- Seamless Scalability: KaaS providers make it easy to add or remove nodes, often automatically through a cluster autoscaler, so applications can handle increasing demand. Whether scaling happens without downtime or performance degradation still depends on how the application and its resource settings are configured.

- Automated Upgrades: Many KaaS platforms can upgrade Kubernetes automatically or with minimal manual effort, giving you access to new features and bug fixes without managing the upgrade process yourself. You should still verify that your workloads are compatible with each new Kubernetes version.

FAQs (Frequently Asked Questions) about Kubernetes

To help address common queries about Kubernetes, here are some frequently asked questions:

A. What is the difference between Kubernetes and Docker?

Kubernetes and Docker are complementary technologies. Docker provides containerization capabilities, while Kubernetes focuses on orchestrating and managing containers at scale, including scaling, networking, and fault tolerance.

B. How does Kubernetes handle high availability and failover?

Kubernetes achieves high availability by distributing application workloads across multiple nodes and automatically recovering from failures. It monitors the health of nodes and containers, restarting containers and rescheduling pods when needed to maintain the desired state.

C. What are the alternatives to Kubernetes for container orchestration?

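Besides Kubernetes, container orchestration solutions include Docker Swarm, HashiCorp Nomad, and Apache Mesos. Red Hat OpenShift is often mentioned alongside them, but it is a Kubernetes distribution built on top of Kubernetes rather than a separate orchestrator. Each option has its own unique features, and the choice depends on specific requirements and preferences.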

D. What is Kubernetes used for?

Kubernetes is used for container orchestration, providing a scalable and resilient platform for deploying, managing, and scaling applications. It automates many operational tasks, simplifying the process of running containerized workloads.

E. What is a pod in Kubernetes?

In Kubernetes, a pod is the smallest deployable unit. It represents a logical group of one or more containers that share the same network namespace and can share storage volumes, so the containers in a pod can work closely together on a specific task.

F. What are some common challenges when migrating applications to Kubernetes?

Migrating applications to Kubernetes may present challenges, including refactoring applications to be container-ready, redesigning network architecture to fit the Kubernetes model, and ensuring data persistence and storage connectivity across the cluster.

G. How does Kubernetes handle rolling updates and backward compatibility?

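Kubernetes supports rolling updates, which gradually replace old pods with new ones so that an application can be updated without downtime, provided it runs enough replicas and has correctly configured readiness probes. A deployment’s maxSurge and maxUnavailable settings control how many extra pods may be created, and how many may be unavailable, during the update. Because old and new versions run side by side during a rollout, the application itself must stay backward compatible (for example, in its APIs and data formats). If an update causes problems, Kubernetes keeps a revision history so that you can roll back, for example with kubectl rollout undo.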

H. Is it possible to run Kubernetes on bare metal servers instead of cloud providers?

Yes, Kubernetes can be run on bare metal servers in addition to cloud providers. This allows organizations to have greater control over their infrastructure and leverage existing hardware investments while still benefiting from Kubernetes’ orchestration capabilities.

By learning more about Kubernetes through these FAQs, you’ll have a better understanding of its functionalities and capabilities for your containerized applications.