Docker Tutorial: Introduction to Docker

What is Docker?

In this Docker tutorial, we introduce Docker’s core concepts and commands. Docker is an open-source platform that lets developers automate the deployment and management of applications inside lightweight, isolated containers. A container packages software together with its dependencies into a standardized unit, so the application runs consistently across different environments.

Benefits of Docker

- Portability: Docker containers can be deployed on any system running a compatible Docker runtime, providing consistent behavior regardless of the underlying infrastructure.

- Scalability: Docker enables easy scaling of applications by allowing multiple instances of containers to run concurrently.

- Resource Efficiency: Containers share the host operating system’s kernel, resulting in reduced overhead and efficient resource utilization.

- Isolation: Docker containers provide a level of isolation, ensuring that applications do not interfere with each other.

- Rapid Deployment: With Docker, applications can be packaged as a single unit, simplifying the deployment process and reducing deployment time.

- Version Control: Docker images can be tagged with versions, so you can roll an application back by running a container from an earlier image tag.

Docker Tutorial: Getting Started with Docker

Installation

To get started with Docker, you need to install it on your operating system. Here are the steps for installation on different platforms:

1. Installing Docker on Windows

To install Docker on Windows, follow these steps:

- Visit the Docker website and download the Docker Desktop installer.

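- Double-click the installer and follow the on-screen instructions to complete the installation. Docker Desktop on Windows runs on top of WSL 2 or Hyper-V, so one of them must be available on your system.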

- Once installed, Docker can be accessed from the Windows Start menu.

2. Installing Docker on macOS

To install Docker on macOS, follow these steps:

- Go to the Docker website and download the Docker Desktop installer for macOS.

- Open the downloaded file and drag the Docker.app icon to the Applications folder.

- Launch Docker.app from the Applications folder to start Docker.

3. Installing Docker on Linux

The process of installing Docker on Linux varies depending on the distribution. Refer to the official Docker documentation for detailed instructions on how to install Docker on your specific Linux distribution.

Docker Concepts

Before diving into Docker, it’s important to understand some fundamental concepts:

1. Containers vs. Virtual Machines

Containers and virtual machines (VMs) both isolate workloads, but at different levels. A VM virtualizes hardware and runs a complete guest operating system with its own kernel. A container shares the host’s kernel and isolates only the application’s processes, filesystem, and network view, which makes containers lighter and faster to start.

2. Images and Docker Registry

In Docker, an image is a lightweight, standalone, executable package that includes everything needed to run a piece of software: the code, runtime, libraries, environment variables, and configuration files. Images are stored and distributed through registries, either a public registry such as Docker Hub or a private one. Within a registry, related images are grouped into repositories and distinguished by tags (for example, nginx:1.25).

3. Containerization and Microservices

Containerization is the process of encapsulating an application and its dependencies into a container. This approach allows for easy deployment and management of applications. Microservices, on the other hand, is an architectural style where applications are broken down into small, loosely-coupled services that can be independently developed and deployed. Docker plays a crucial role in enabling microservices architecture by facilitating easy containerization and deployment of individual services.

Working with Docker Containers

Running Containers

To run containers in Docker, you need to perform the following steps:

1. Pulling Docker Images

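Before a container can run, its image must be available locally. Use the docker pull command followed by the image name and tag (for example, docker pull nginx:1.25) to download it from a registry; if you omit the tag, Docker uses latest. docker run also pulls the image automatically when it is not already present.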

2. Running a Container

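To run a Docker container, use the docker run command followed by any options and then the image name. This starts a new container based on the specified image. Common options include -d to run it in the background and --name to give it a readable name; any arguments placed after the image name override the image’s default command.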
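To make the pull-then-run sequence concrete, here is a minimal Python sketch that only builds the command lines and does not call Docker. The helper names pull_cmd and run_cmd are hypothetical, and the image nginx, tag 1.25, and container name web are illustrative choices.

```python
from __future__ import annotations

import shlex


def pull_cmd(image: str, tag: str = "latest") -> list[str]:
    """Build the argv for `docker pull IMAGE:TAG`."""
    return ["docker", "pull", f"{image}:{tag}"]


def run_cmd(image: str, tag: str = "latest", name: str | None = None,
            detach: bool = True, args: list[str] | None = None) -> list[str]:
    """Build the argv for `docker run [OPTIONS] IMAGE:TAG [ARGS...]`."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")  # run the container in the background
    if name:
        cmd += ["--name", name]  # a readable name to use instead of the container ID
    cmd.append(f"{image}:{tag}")
    cmd += args or []  # arguments after the image replace the image's default CMD
    return cmd


if __name__ == "__main__":
    print(shlex.join(pull_cmd("nginx", "1.25")))
    print(shlex.join(run_cmd("nginx", "1.25", name="web")))
```

On a machine with Docker installed, passing one of these lists to subprocess.run(cmd, check=True) would execute it; keeping the argv as a list avoids shell-quoting problems.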

Managing Containers

Once containers are running, you may need to manage them effectively. Docker provides several commands for container management:

1. Starting and Stopping Containers

To start a stopped container, use the docker start command followed by the container ID or name. Similarly, to stop a running container, use the docker stop command.

2. Restarting Containers

To restart a container, use the docker restart command followed by the container ID or name. This will stop and start the container in a single command.

3. Pausing and Resuming Containers

To temporarily pause a running container, use the docker pause command followed by the container ID or name. To resume a paused container, use the docker unpause command.

4. Removing Containers

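To remove a container, use the docker rm command followed by the container ID or name. This deletes the container and its writable layer. A running container must be stopped first, or removed with docker rm -f. Named volumes are kept; docker rm -v additionally removes anonymous volumes attached to the container.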
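The lifecycle commands above all share one shape: a subcommand followed by one or more container IDs or names. A small sketch of that pattern, with a hypothetical helper lifecycle_cmd that validates the action and only builds the argv:

```python
from __future__ import annotations

import shlex

# Lifecycle subcommands covered above; each accepts one or more container IDs or names.
LIFECYCLE_ACTIONS = {"start", "stop", "restart", "pause", "unpause", "rm"}


def lifecycle_cmd(action: str, *containers: str, force: bool = False) -> list[str]:
    """Build the argv for `docker ACTION [-f] CONTAINER...`."""
    if action not in LIFECYCLE_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    if not containers:
        raise ValueError("at least one container ID or name is required")
    cmd = ["docker", action]
    if force:
        if action != "rm":
            raise ValueError("force is only meaningful for rm in this sketch")
        cmd.append("-f")  # rm -f kills a running container before removing it
    return cmd + list(containers)


if __name__ == "__main__":
    for action in ("stop", "start", "restart", "pause", "unpause"):
        print(shlex.join(lifecycle_cmd(action, "web")))
    print(shlex.join(lifecycle_cmd("rm", "web", force=True)))
```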

Interacting with Containers

Docker provides various ways to interact with running containers:

1. Accessing Container Shell

To open a shell in a running container, use docker exec -it followed by the container ID or name and the shell to start, for example docker exec -it web sh (or bash if the image includes it). More generally, docker exec runs any command within the container’s context.

2. Copying Files to/from Containers

To copy files to or from a container, use the docker cp command with a source and a destination path, writing the container side as CONTAINER:PATH (for example, docker cp ./app.conf web:/etc/app/app.conf). It works in both directions between the host and the container.

3. Attaching and Detaching from Containers

To attach your terminal to a running container’s main process, use the docker attach command followed by the container ID or name. To detach without stopping the container, press Ctrl + P followed by Ctrl + Q; this key sequence works when the container was started with -it.
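A minimal sketch of the exec and cp command lines described above. The helper names, the container name web, and the file paths are hypothetical; the code only builds argv lists.

```python
from __future__ import annotations

import shlex


def exec_shell_cmd(container: str, shell: str = "sh") -> list[str]:
    # -i keeps STDIN open and -t allocates a terminal; an interactive shell needs both
    return ["docker", "exec", "-it", container, shell]


def cp_to_container_cmd(host_path: str, container: str, container_path: str) -> list[str]:
    # docker cp writes the container side as CONTAINER:PATH
    return ["docker", "cp", host_path, f"{container}:{container_path}"]


def cp_from_container_cmd(container: str, container_path: str, host_path: str) -> list[str]:
    return ["docker", "cp", f"{container}:{container_path}", host_path]


if __name__ == "__main__":
    print(shlex.join(exec_shell_cmd("web")))
    print(shlex.join(cp_to_container_cmd("./app.conf", "web", "/etc/app/app.conf")))
    print(shlex.join(cp_from_container_cmd("web", "/var/log/app.log", "./app.log")))
```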

Networking and Linking Containers

Docker provides several mechanisms for container networking and linking:

1. Container Port Mapping

To make a container’s port reachable from the host, publish it with the -p option when running the container, in the form -p HOST_PORT:CONTAINER_PORT (for example, -p 8080:80). Published ports let clients outside Docker reach the container; containers on the same network can talk to each other directly without publishing anything.

2. Creating Custom Networks

Docker allows you to create custom (user-defined) networks with the docker network create command and attach containers to them with --network. Containers on the same user-defined network can reach each other by container name through Docker’s embedded DNS, while staying isolated from containers on other networks.

3. Container Linking

Container linking connects containers so that one can reach another through injected environment variables and hostname entries. Use the --link option when running a container to link it with another running container. Note that --link is a legacy feature; Docker recommends user-defined networks instead.
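The network-based alternative to linking can be sketched as three commands: create a user-defined network, start a cache on it, and start a web server that publishes a port. The network name appnet, the container names, and the images redis:7 and nginx:1.25 are illustrative assumptions; the sketch only builds the argv lists.

```python
from __future__ import annotations

import shlex


def network_setup_cmds(network: str = "appnet") -> list[list[str]]:
    """Commands for two containers on one user-defined bridge network."""
    return [
        # Create the network; containers attached to it can resolve each other by name
        ["docker", "network", "create", network],
        # Cache container: reachable as "cache" on the network, not published to the host
        ["docker", "run", "-d", "--name", "cache", "--network", network, "redis:7"],
        # Web container: host port 8080 forwards to port 80 inside the container
        ["docker", "run", "-d", "--name", "web", "--network", network,
         "-p", "8080:80", "nginx:1.25"],
    ]


if __name__ == "__main__":
    for cmd in network_setup_cmds():
        print(shlex.join(cmd))
```

With this setup, processes in web can reach the cache at the hostname cache, and only the web container is exposed on the host.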

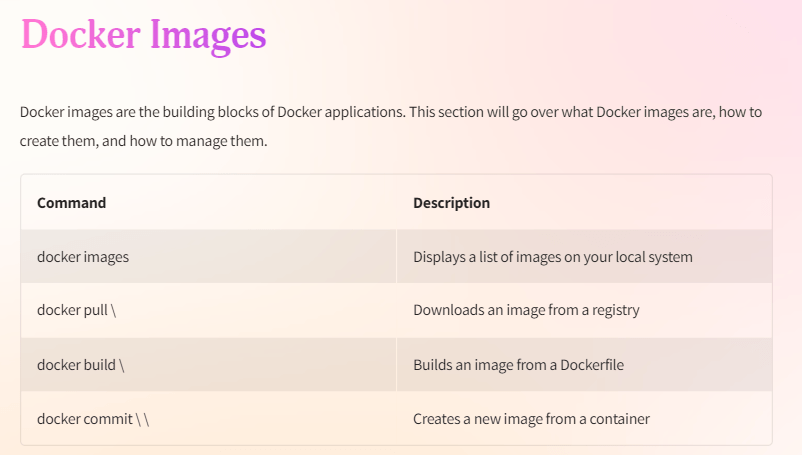

Docker Images and Dockerfile

Creating Docker Images

To create custom Docker images, you can use a Dockerfile, which is a text file that contains instructions for building an image. Follow these steps:

1. Building Images from Dockerfile

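Write a Dockerfile, then run docker build with a tag and the path to the build context, for example docker build -t myapp:1.0 . (the final dot is the context). By default Docker looks for a file named Dockerfile at the root of the context; use -f to point to a different file. Docker executes the instructions in the Dockerfile to build the image.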

2. Pushing Images to Docker Registry

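To publish your custom Docker image, give it a name the registry accepts (for Docker Hub, USERNAME/IMAGE:TAG), log in with docker login, and run the docker push command followed by the image name and tag. Others with access to the repository can then pull and use your image.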
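A minimal sketch of the build-then-push sequence as argv lists. The repository name myuser/myapp and tag 1.0 are placeholders, and the sketch assumes docker login has already been run for the target registry.

```python
from __future__ import annotations

import shlex


def build_and_push_cmds(repo: str, tag: str, context: str = ".",
                        dockerfile: str | None = None) -> list[list[str]]:
    ref = f"{repo}:{tag}"
    build = ["docker", "build", "-t", ref]
    if dockerfile:
        build += ["-f", dockerfile]  # otherwise Docker uses CONTEXT/Dockerfile
    build.append(context)            # the build context is the last argument
    return [
        build,
        ["docker", "push", ref],     # assumes `docker login` has already succeeded
    ]


if __name__ == "__main__":
    for cmd in build_and_push_cmds("myuser/myapp", "1.0"):
        print(shlex.join(cmd))
```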

Dockerfile Directives

A Dockerfile consists of several directives that define the image’s build process:

1. FROM

The FROM directive specifies the base image to use for the subsequent build steps. It is the starting point for building your image.

2. RUN

The RUN directive allows you to execute commands within the image during the build process. Use it to install dependencies, set up configurations, or perform other necessary tasks.

3. COPY

The COPY directive copies files and directories from the build context (or, with --from, from another build stage) into the image’s file system. Source paths must lie inside the build context.

4. EXPOSE

The EXPOSE directive documents that the container listens on the specified network ports at runtime. By itself it does not publish the ports; use the -p flag when running the container (or -P to publish all exposed ports to random host ports).

5. CMD and ENTRYPOINT

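The CMD and ENTRYPOINT directives specify what runs when the container starts. ENTRYPOINT sets the main executable. CMD supplies defaults: the full command when there is no ENTRYPOINT, or default arguments appended to ENTRYPOINT when there is one. Arguments given after the image name in docker run replace CMD, while ENTRYPOINT can only be overridden with the --entrypoint flag.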

6. Using ENV Variables

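You can define environment variables in a Dockerfile using the ENV directive. They are available to later build steps and to processes in the running container, and they can be overridden at runtime with docker run -e KEY=VALUE, which makes them a convenient place for configuration values.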
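The CMD/ENTRYPOINT rules are easiest to see as a small function. This sketch models only the exec (JSON array) forms, for example ENTRYPOINT ["python", "app.py"] with CMD ["--port", "8000"]; shell forms and the --entrypoint flag are deliberately not modelled, and effective_command is a hypothetical name.

```python
from __future__ import annotations


def effective_command(entrypoint: list[str] | None,
                      cmd: list[str] | None,
                      run_args: list[str] | None = None) -> list[str]:
    """Model the argv Docker starts for exec-form ENTRYPOINT/CMD.

    entrypoint -- ENTRYPOINT ["..."] from the Dockerfile (None if absent)
    cmd        -- CMD ["..."] from the Dockerfile (None if absent)
    run_args   -- arguments placed after the image name in `docker run`
    """
    # Arguments on the docker run command line replace CMD entirely.
    args = run_args if run_args else (cmd or [])
    # ENTRYPOINT, when present, is always the prefix (the --entrypoint flag is not modelled).
    return (entrypoint or []) + args


if __name__ == "__main__":
    # ENTRYPOINT ["python", "app.py"] + CMD ["--port", "8000"]
    print(effective_command(["python", "app.py"], ["--port", "8000"]))
    # Same image, started as: docker run IMAGE --port 9000
    print(effective_command(["python", "app.py"], ["--port", "8000"], ["--port", "9000"]))
```

The design point this illustrates: putting the executable in ENTRYPOINT and its default flags in CMD lets users change the flags with plain docker run arguments without retyping the program name.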

Optimizing Images

To optimize Docker images, consider the following techniques:

1. Image Layers

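Docker images are composed of multiple read-only layers; each RUN, COPY, and ADD instruction creates a new one. Combine related commands into a single RUN (for example, installing packages and cleaning the package cache in the same step), because files deleted in a later layer still take up space in the layer that created them. Ordering instructions from least to most frequently changing also lets Docker reuse cached layers, which speeds up rebuilds and reduces image size.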

2. Using .dockerignore

Create a .dockerignore file in your project directory to list files and directories that should be excluded from the build context sent to Docker. This keeps unnecessary or sensitive files (such as the .git directory or local .env files) out of the image, reduces its size, and speeds up builds.

3. Multi-stage Builds

Use multi-stage builds to create smaller and more efficient images. This technique involves using different base images for different stages of the build process, allowing you to copy only the necessary artifacts into the final image.
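As an illustration of multi-stage builds and .dockerignore together, this sketch writes both files for a hypothetical Go project. The base images golang:1.22 and gcr.io/distroless/static-debian12, the /out/app path, and the ignore patterns are assumptions chosen for the example, not requirements.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

# Stage 1 compiles with the full Go toolchain; stage 2 keeps only the binary.
DOCKERFILE = """\
FROM golang:1.22 AS build
WORKDIR /src
# Copy module files first so dependency downloads stay cached between builds
COPY go.* ./
RUN go mod download
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

FROM gcr.io/distroless/static-debian12
COPY --from=build /out/app /app
ENTRYPOINT ["/app"]
"""

# Paths excluded from the build context sent to Docker.
DOCKERIGNORE = """\
.git
*.log
.env
bin/
"""


def write_build_files(project_dir: str | Path) -> None:
    root = Path(project_dir)
    (root / "Dockerfile").write_text(DOCKERFILE)
    (root / ".dockerignore").write_text(DOCKERIGNORE)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        write_build_files(tmp)
        print(sorted(p.name for p in Path(tmp).iterdir()))
```

Only the final FROM stage ends up in the image, so the Go toolchain from the first stage adds nothing to its size.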

Docker Compose

Introduction to Docker Compose

Docker Compose is a tool that allows you to define and manage multi-container Docker applications using a YAML file. It simplifies the process of running multiple containers and their dependencies.

Docker Compose YAML File

The Docker Compose YAML file provides a declarative way to define and configure your application’s services, networks, and volumes. It consists of the following sections:

1. Services

The services section defines the different containers that make up your application. Each container is defined as a separate service and includes configuration options such as the image to use, ports to expose, and dependencies.

2. Networks

The networks section allows you to define custom networks for your application. Networks enable communication and isolation between containers.

3. Volumes

The top-level volumes section declares named volumes, which services then mount through their own volumes entries. Volumes provide persistent storage that survives container removal and can be shared between containers.
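The three sections can be pictured as one nested structure. This sketch describes a hypothetical web-plus-cache application as a Python dict and adds a small consistency check (undeclared_refs, a hypothetical helper) for networks and named volumes that services use but the file never declares. Because JSON is a subset of YAML, Compose should be able to read the printed JSON as a Compose file; treat that as an assumption and confirm with docker compose config.

```python
from __future__ import annotations

import json

# Hypothetical two-service application: a web front end and a Redis cache.
COMPOSE = {
    "services": {
        "web": {
            "image": "nginx:1.25",
            "ports": ["8080:80"],
            "depends_on": ["cache"],
            "networks": ["frontend", "backend"],
        },
        "cache": {
            "image": "redis:7",
            "networks": ["backend"],
            "volumes": ["cache-data:/data"],  # named volume mounted at Redis's data directory
        },
    },
    "networks": {"frontend": {}, "backend": {}},
    "volumes": {"cache-data": {}},  # top-level declaration of the named volume
}


def undeclared_refs(compose: dict) -> list[str]:
    """List networks or named volumes that services use but the file never declares."""
    problems = []
    networks = compose.get("networks", {})
    volumes = compose.get("volumes", {})
    for name, svc in compose.get("services", {}).items():
        for net in svc.get("networks", []):
            if net not in networks and net != "default":  # "default" is implicit
                problems.append(f"{name}: network {net!r}")
        for spec in svc.get("volumes", []):
            source = spec.split(":", 1)[0]
            # Sources starting with / or . are host paths (bind mounts), not named volumes
            if ":" in spec and not source.startswith(("/", ".")) and source not in volumes:
                problems.append(f"{name}: volume {source!r}")
    return problems


if __name__ == "__main__":
    print(json.dumps(COMPOSE, indent=2))
```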

Running Multiple Containers with Docker Compose

To start multiple containers defined in a Docker Compose file, follow these steps:

1. Starting and Stopping Services

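Use the docker compose up command to start all services defined in the Compose file (older installations use the standalone docker-compose command instead). In the foreground, press Ctrl + C to stop the services. If you started them in the background with -d, use docker compose stop to stop them, or docker compose down to stop and remove the containers and networks.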

2. Scaling Containers

Docker Compose can run several instances of a service. Pass --scale SERVICE=NUM to docker compose up, for example --scale worker=3. A service that publishes a fixed host port cannot be scaled this way on a single host, because the replicas would compete for the same port.
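A minimal sketch of the Compose command lines for starting, scaling, and tearing down services. The file name compose.yaml and the service name worker are placeholders; the helpers only build argv lists.

```python
from __future__ import annotations

import shlex


def compose_up_cmd(files: list[str] | None = None, detach: bool = True,
                   scale: dict[str, int] | None = None) -> list[str]:
    cmd = ["docker", "compose"]
    for f in files or []:
        cmd += ["-f", f]  # -f belongs to `docker compose`, so it comes before `up`
    cmd.append("up")
    if detach:
        cmd.append("-d")  # run in the background; stop later with `stop` or `down`
    for service, replicas in (scale or {}).items():
        cmd += ["--scale", f"{service}={replicas}"]
    return cmd


def compose_down_cmd() -> list[str]:
    # Stops and removes the project's containers and networks (named volumes are kept)
    return ["docker", "compose", "down"]


if __name__ == "__main__":
    print(shlex.join(compose_up_cmd(scale={"worker": 3})))
    print(shlex.join(compose_down_cmd()))
```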

Environment Variables

Docker Compose supports environment variables, allowing you to configure services based on different environments. There are two ways to use environment variables:

1. Using .env Files

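Create a .env file in the same directory as the Docker Compose file and define KEY=VALUE pairs. Docker Compose reads this file automatically and uses its values for variable substitution in the Compose file. The variables are not passed into containers by themselves; to do that, reference them in a service’s environment section or load a file with env_file.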

2. Variable Substitution

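In the Docker Compose file, you can reference variables as $VARIABLE_NAME or ${VARIABLE_NAME}. Compose replaces these references with values from the shell environment or the .env file when it parses the file, and shell values take precedence. The form ${VARIABLE_NAME:-default} supplies a fallback when the variable is unset or empty.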
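To illustrate how .env values feed substitution, here is a rough approximation in Python. The parser handles only simple KEY=VALUE lines, comments, and surrounding quotes, and string.Template covers only the $VAR and ${VAR} forms (plus $$ as an escaped dollar sign). Compose's real parser does more, including ${VAR:-default}, and replaces unset variables with an empty string, whereas safe_substitute leaves them untouched. The function names are hypothetical.

```python
from __future__ import annotations

import os
from string import Template


def parse_env_file(text: str) -> dict[str, str]:
    """Minimal KEY=VALUE parser: skips blank lines and # comments, strips matching quotes."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # ignore lines without '='
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def substitute(compose_text: str, dotenv: dict[str, str],
               environ: dict[str, str] | None = None) -> str:
    """Approximate $VAR / ${VAR} substitution; shell values override .env values."""
    shell = dict(os.environ) if environ is None else environ
    env = {**dotenv, **shell}
    return Template(compose_text).safe_substitute(env)


if __name__ == "__main__":
    dotenv = parse_env_file("# image settings\nTAG=1.25\nPORT='8080'\n")
    print(substitute('image: nginx:${TAG}\nports: ["$PORT:80"]', dotenv, environ={}))
```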

Docker Swarm

Understanding Docker Swarm

Docker Swarm is a native clustering and orchestration solution provided by Docker. It allows you to create and manage a swarm of Docker nodes, forming a distributed system for deploying and scaling applications.

Setting Up a Swarm Cluster

To set up a Docker Swarm cluster, follow these steps:

1. Manager Nodes

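Choose one or more machines to act as manager nodes and initialize the swarm on one of them with the docker swarm init command. That node becomes the first Swarm manager. Additional managers can join with the manager join token; an odd number of managers (such as three or five) lets the swarm keep a quorum if one of them fails.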

2. Worker Nodes

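After initializing the swarm, add worker nodes to the cluster with the docker swarm join command, using the join token printed by docker swarm init (you can print it again with docker swarm join-token worker). Managers schedule tasks across the swarm; worker nodes run the containers assigned to them.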
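A minimal sketch of the swarm setup commands as argv lists. The manager address 192.0.2.10 (a documentation-range IP) and the token string are placeholders; 2377 is Docker's default swarm management port.

```python
from __future__ import annotations

import shlex

SWARM_PORT = 2377  # default port for swarm management traffic


def swarm_init_cmd(advertise_addr: str) -> list[str]:
    # Run on the first manager; the address must be reachable by the other nodes
    return ["docker", "swarm", "init", "--advertise-addr", advertise_addr]


def join_token_cmd(role: str = "worker") -> list[str]:
    # Run on a manager to print the join command for a worker or an extra manager
    if role not in ("worker", "manager"):
        raise ValueError("role must be 'worker' or 'manager'")
    return ["docker", "swarm", "join-token", role]


def swarm_join_cmd(token: str, manager_addr: str, port: int = SWARM_PORT) -> list[str]:
    # Run on each new node with the token printed by the manager
    return ["docker", "swarm", "join", "--token", token, f"{manager_addr}:{port}"]


if __name__ == "__main__":
    print(shlex.join(swarm_init_cmd("192.0.2.10")))
    print(shlex.join(join_token_cmd("worker")))
    print(shlex.join(swarm_join_cmd("SWMTKN-placeholder", "192.0.2.10")))
```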

Deploying Services in a Swarm

To deploy services in a Docker Swarm cluster, perform the following steps:

1. Creating a Service

Use the docker service create command to create a service. Specify the image, desired replicas, and other necessary configurations. Docker Swarm will schedule the service across the available swarm nodes.

2. Scaling Services

To scale a service, use the docker service scale command followed by SERVICE=REPLICAS, for example docker service scale web=5. Docker Swarm starts or stops tasks across the swarm nodes to match the requested count.

3. Updating Services

To update a service, use the docker service update command with the changes you want (for example, --image for a new image version) followed by the service name. Docker Swarm performs a rolling update, replacing tasks in batches controlled by --update-parallelism and --update-delay, so the service can keep serving requests during the update.
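The create, scale, and update steps can be sketched as argv builders. The service name web and the nginx image tags are illustrative, and the parallelism and delay defaults are choices made for this example rather than Docker's own defaults.

```python
from __future__ import annotations

import shlex


def service_create_cmd(name: str, image: str, replicas: int = 1,
                       publish: str | None = None) -> list[str]:
    cmd = ["docker", "service", "create", "--name", name, "--replicas", str(replicas)]
    if publish:
        cmd += ["--publish", publish]  # e.g. "8080:80", served through the ingress network
    cmd.append(image)
    return cmd


def service_scale_cmd(name: str, replicas: int) -> list[str]:
    return ["docker", "service", "scale", f"{name}={replicas}"]


def service_update_image_cmd(name: str, image: str, parallelism: int = 1,
                             delay: str = "10s") -> list[str]:
    # Rolling update: replace `parallelism` tasks at a time, waiting `delay` between batches
    return ["docker", "service", "update", "--image", image,
            "--update-parallelism", str(parallelism), "--update-delay", delay, name]


if __name__ == "__main__":
    print(shlex.join(service_create_cmd("web", "nginx:1.25", replicas=3, publish="8080:80")))
    print(shlex.join(service_scale_cmd("web", 5)))
    print(shlex.join(service_update_image_cmd("web", "nginx:1.27")))
```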

Swarm Networking

Docker Swarm provides several networking features that simplify communication between nodes and services:

1. Overlay Networks

Overlay networks in Docker Swarm let containers on different swarm nodes communicate as if they were on the same network. Swarm management traffic is encrypted by default, and you can also encrypt application traffic on an overlay network by creating it with --opt encrypted.

2. Load Balancing with Ingress Network

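The ingress network handles ports published by swarm services. A published port is open on every node in the swarm (the routing mesh), and incoming connections are load-balanced across the service’s running tasks, even when they arrive at a node that is not running one.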

3. Service Discovery

Docker Swarm provides an integrated DNS server that allows services to discover and communicate with each other using their service names. This simplifies communication within the cluster.
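The three networking features can be combined in a small sketch: an encrypted overlay network, an internal service that others reach by name through Swarm's DNS, and a public service published through the ingress network. The network name backend and the image myorg/api:1.0 are hypothetical.

```python
from __future__ import annotations

import shlex


def overlay_stack_cmds(network: str = "backend") -> list[list[str]]:
    return [
        # Overlay network spanning all swarm nodes; --opt encrypted also encrypts app traffic
        ["docker", "network", "create", "--driver", "overlay", "--opt", "encrypted", network],
        # Internal service: other services on the network reach it at the DNS name "api"
        ["docker", "service", "create", "--name", "api", "--network", network,
         "myorg/api:1.0"],
        # Public service: the published port is served through the ingress routing mesh
        ["docker", "service", "create", "--name", "web", "--network", network,
         "--publish", "8080:80", "nginx:1.25"],
    ]


if __name__ == "__main__":
    for cmd in overlay_stack_cmds():
        print(shlex.join(cmd))
```

Tasks of web can then call the API at http://api without knowing which nodes its tasks run on.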

Monitoring and Logging in Docker

Container Monitoring

Monitoring Docker containers is crucial for understanding resource usage and performance. Docker provides various tools for container monitoring:

1. Docker Stats

The docker stats command displays live CPU, memory, and network usage statistics for running containers. It allows you to monitor resource consumption and identify performance bottlenecks.

2. Using cAdvisor

Container Advisor (cAdvisor) is an open-source tool that provides detailed resource usage and performance statistics for Docker containers. It collects and exports metrics in real-time, facilitating container monitoring and analysis.
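docker stats can also emit machine-readable output, which makes quick scripted checks possible. This sketch ranks containers by CPU usage from the output of docker stats --no-stream --format "{{json .}}". It assumes the Name and CPUPerc field names printed by recent Docker versions; check them against your own output. collect_stats needs a Docker host, while top_cpu works on any captured text.

```python
from __future__ import annotations

import json
import subprocess


def collect_stats() -> str:
    """Return one JSON object per running container (requires a Docker host)."""
    result = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_percent(value: str) -> float:
    return float(value.strip().rstrip("%"))  # "12.75%" -> 12.75


def top_cpu(stats_output: str, n: int = 3) -> list[tuple[str, float]]:
    """Rank containers by CPU usage from docker stats JSON-lines output."""
    rows = [json.loads(line) for line in stats_output.splitlines() if line.strip()]
    ranked = sorted(((row["Name"], parse_percent(row["CPUPerc"])) for row in rows),
                    key=lambda item: item[1], reverse=True)
    return ranked[:n]
```

For example, top_cpu(collect_stats()) on a Docker host returns the names and CPU percentages of the busiest containers.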

Log Management

Docker allows you to manage container logs efficiently using different logging drivers:

1. Docker Logging Drivers

Docker supports various logging drivers, such as json-file (the default), syslog, and journald. The driver determines where and how container logs are stored; you can set it per container with --log-driver or as the daemon-wide default in daemon.json.

2. Centralized Logging

To centralize container logs, you can integrate Docker with logging aggregation systems like ELK Stack (Elasticsearch, Logstash, and Kibana) or cloud-based logging services. Centralized logging helps in analyzing logs across multiple containers and simplifies troubleshooting.
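A common first step before centralizing logs is to stop local json-file logs from growing without bound. This sketch builds a docker run command with log rotation and the equivalent daemon.json fragment; the 10m and 3 limits and the container name are illustrative choices.

```python
from __future__ import annotations

import json


def run_with_log_rotation_cmd(image: str, name: str,
                              max_size: str = "10m", max_file: int = 3) -> list[str]:
    return ["docker", "run", "-d", "--name", name,
            "--log-driver", "json-file",
            "--log-opt", f"max-size={max_size}",  # rotate when a log file reaches this size
            "--log-opt", f"max-file={max_file}",  # keep at most this many log files
            image]


def daemon_logging_config(max_size: str = "10m", max_file: int = 3) -> str:
    """The same settings as a daemon.json fragment, applied to newly created containers."""
    # daemon.json expects log-opts values as strings
    return json.dumps({"log-driver": "json-file",
                       "log-opts": {"max-size": max_size, "max-file": str(max_file)}},
                      indent=2)


if __name__ == "__main__":
    print(" ".join(run_with_log_rotation_cmd("nginx:1.25", "web")))
    print(daemon_logging_config())
```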

Docker Security

Container Isolation

Docker uses several underlying Linux kernel technologies to provide container isolation. Namespaces give each container its own view of processes, network interfaces, mounts, and hostnames, while control groups (cgroups) limit how much CPU, memory, and I/O it can use. Together they keep containers from seeing or starving each other’s resources.

Containerization has revolutionized the way applications are deployed and managed. Docker, a popular containerization platform, has gained significant traction due to its flexibility and ease of use. However, ensuring the security of Docker containers is of utmost importance to protect sensitive data and prevent unauthorized access. In this section, we will delve into Docker security best practices and explore the tools and techniques to enhance the overall security of your Docker environment.

A. Container Isolation

One of the key aspects of Docker security lies in container isolation. Each application runs in its own self-contained environment, so a problem inside one container is less likely to affect other containers or the host. This isolation is weaker than a virtual machine’s, however: all containers share the host kernel, so a kernel vulnerability or an overly privileged container (for example, one started with --privileged) can break it.

B. Docker Security Best Practices

Implementing robust security practices is crucial for protecting your Docker environment from potential threats. Let’s explore some best practices that can bolster your Docker security posture:

1. Securing Docker Daemon

The Docker daemon (dockerd) is the core component of the Docker architecture and runs with root privileges, so access to it must be tightly controlled. By default it listens only on a local Unix socket, and anyone who can use that socket, including members of the docker group, effectively has root access on the host. If you must expose the daemon over TCP, enable TLS with client certificate verification (--tlsverify) so that only clients holding certificates signed by your CA can connect, and never expose an unencrypted TCP port.

2. Securing Container Images

Container images serve as the building blocks for Docker containers, and securing these images is fundamental to maintaining a secure Docker environment. It is essential to ensure that the base images you utilize are from trusted sources and have not been tampered with. Regularly update and patch these images to address any vulnerabilities that may arise. Employing vulnerability scanning tools can help identify potential security flaws in container images and proactively mitigate any risks.

3. User and Permissions Management

Enforcing strict user and permissions management within Docker is crucial for preventing unauthorized access and maintaining data integrity. Apply the principle of least privilege: run container processes as a non-root user (USER in the Dockerfile or --user at runtime), drop Linux capabilities the application does not need, and consider user namespace remapping so that root inside a container maps to an unprivileged user on the host. Limit who can reach the Docker daemon, and regularly review and audit these permissions to remove unnecessary privileges.
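A minimal sketch of a least-privilege docker run command. The image myorg/api:1.0, container name, and UID/GID 1000 are hypothetical, and this exact set of flags may break images that expect to run as root, write to their filesystem, or bind ports below 1024; add back only what the application needs.

```python
from __future__ import annotations

import shlex


def hardened_run_cmd(image: str, name: str, uid: int = 1000, gid: int = 1000,
                     add_caps: list[str] | None = None) -> list[str]:
    cmd = ["docker", "run", "-d", "--name", name,
           "--user", f"{uid}:{gid}",                # run the process as a non-root user
           "--read-only",                           # mount the root filesystem read-only
           "--tmpfs", "/tmp",                       # writable scratch space despite --read-only
           "--cap-drop", "ALL",                     # start from zero Linux capabilities
           "--security-opt", "no-new-privileges"]   # block privilege gain via setuid binaries
    for cap in add_caps or []:
        cmd += ["--cap-add", cap]                   # re-add only what the app really needs
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    print(shlex.join(hardened_run_cmd("myorg/api:1.0", "api")))
```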

Docker Orchestration Tools

Docker orchestration is the process of managing, scaling, and automating the deployment of Docker containers. While Docker itself provides basic orchestration capabilities, utilizing dedicated orchestration tools can significantly enhance the management of complex container environments. Let’s explore some popular Docker orchestration tools and their features.

A. Kubernetes

1. Introduction to Kubernetes

Kubernetes, also known as K8s, is an open-source container orchestration platform that automates container deployment, scaling, and management. It provides a robust and scalable foundation for running containerized applications, offering features like automatic scaling, self-healing, and load balancing. Kubernetes simplifies the management of containerized applications by abstracting away the underlying infrastructure and providing a consistent environment.

2. Docker and Kubernetes Integration

Images built with Docker follow the OCI image format, so Kubernetes can run them directly. They are typically deployed through declarative YAML manifests that describe replicas, scaling, and load balancing across multiple containers. Note that since Kubernetes 1.24 the kubelet no longer talks to Docker Engine through the built-in dockershim; clusters usually run these images with containerd or CRI-O instead.
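To show how a Docker-built image ends up in Kubernetes, this sketch generates a minimal Deployment manifest as a Python dict and prints it as JSON, which kubectl apply -f accepts as well as YAML. The name web, the image myuser/myapp:1.0, and the replica count are placeholders; the image must be pushed to a registry the cluster can pull from.

```python
from __future__ import annotations

import json


def deployment_manifest(name: str, image: str, replicas: int = 3,
                        port: int = 80) -> dict:
    labels = {"app": name}  # the selector must match the pod template's labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,  # an image built with docker build and pushed
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "myuser/myapp:1.0"), indent=2))
```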

B. Other Orchestration Tools

Apart from Kubernetes, several other Docker orchestration tools offer diverse features and capabilities. Let’s explore a few notable ones:

1. Nomad

Nomad, developed by HashiCorp, is a flexible and lightweight container orchestration tool. It supports multiple schedulers and provides a unified interface for managing a variety of workloads. Nomad seamlessly integrates with Docker and offers features like dynamic scaling, automatic workload placement, and advanced scheduling capabilities.

2. Apache Mesos

Apache Mesos is an open-source distributed systems kernel that pools a cluster’s resources and offers them to frameworks, which schedule workloads such as containerized applications. With fine-grained resource allocation and multi-tenancy support, Mesos can manage Docker containers at scale, typically through a framework such as Marathon, and it supports highly available master nodes.

3. Amazon ECS

Amazon Elastic Container Service (ECS) is a fully managed container orchestration service offered by Amazon Web Services (AWS). It simplifies the deployment and management of Docker containers on AWS infrastructure. ECS provides features like automatic scaling, load balancing, and integration with other AWS services, making it a convenient choice for running Docker containers in the cloud.

Summary

In this comprehensive Docker tutorial, we have covered various aspects of Docker, ranging from basic concepts to advanced security practices and orchestration tools. Let’s briefly recap the key points covered:

A. Recap of Docker Concepts: We explored the fundamentals of Docker, including containerization, Docker architecture, and container management.

B. Overview of Covered Topics: We walked through running and managing containers, building and optimizing images, Docker Compose, Docker Swarm, and monitoring and logging. We also covered Docker security best practices (container isolation, securing the Docker daemon and container images, and managing user permissions) and popular orchestration tools such as Kubernetes, Nomad, Apache Mesos, and Amazon ECS.

FAQs (Frequently Asked Questions)

As Docker continues to gain popularity, it’s natural to have queries regarding its functionalities. Here are some commonly asked questions and their brief answers:

A. What is the difference between Docker and Virtual Machines?

Docker containers share the host kernel and isolate applications at the operating-system level, whereas virtual machines (VMs) run a full guest operating system on virtualized hardware. As a result, containers start faster and use resources more efficiently, while VMs provide stronger isolation.

B. How can I access files inside a Docker container?

You can access files inside a Docker container by using the “docker cp” command to copy files between the container and the host system. Alternatively, you can mount host directories or volumes into the container to enable seamless file access.

C. Can I run Docker on Windows/Linux/macOS?

Yes. Docker Engine runs natively on Linux. On Windows and macOS, Docker Desktop runs Linux containers inside a lightweight virtual machine, and on Windows it can also run Windows containers.

D. How to secure Docker containers?

Securing Docker containers involves implementing best practices such as container isolation, securing the Docker daemon, ensuring the integrity of container images, and managing user permissions effectively. Regular vulnerability scanning, updating of container images, and enforcing access control policies are also crucial for maintaining container security.

E. How does Docker Swarm compare to Kubernetes for orchestration?

Docker Swarm is Docker’s native orchestration solution, offering basic cluster management capabilities suitable for smaller deployments. On the other hand, Kubernetes provides advanced features, scalability, and a wider ecosystem, making it preferable for managing complex containerized applications at scale.

F. How do I monitor Docker container performance?

Docker provides built-in monitoring commands such as docker stats (live resource usage) and docker events (real-time container events). Additionally, numerous third-party monitoring solutions, such as Prometheus and Grafana, integrate with Docker to provide enhanced performance monitoring and metrics visualization.

With this comprehensive Docker tutorial, you should now have a solid understanding of Docker’s core concepts, security best practices, popular orchestration tools like Kubernetes, and answers to common queries. Start harnessing the power of Docker to simplify application deployment, improve efficiency, and fortify your environment’s security.